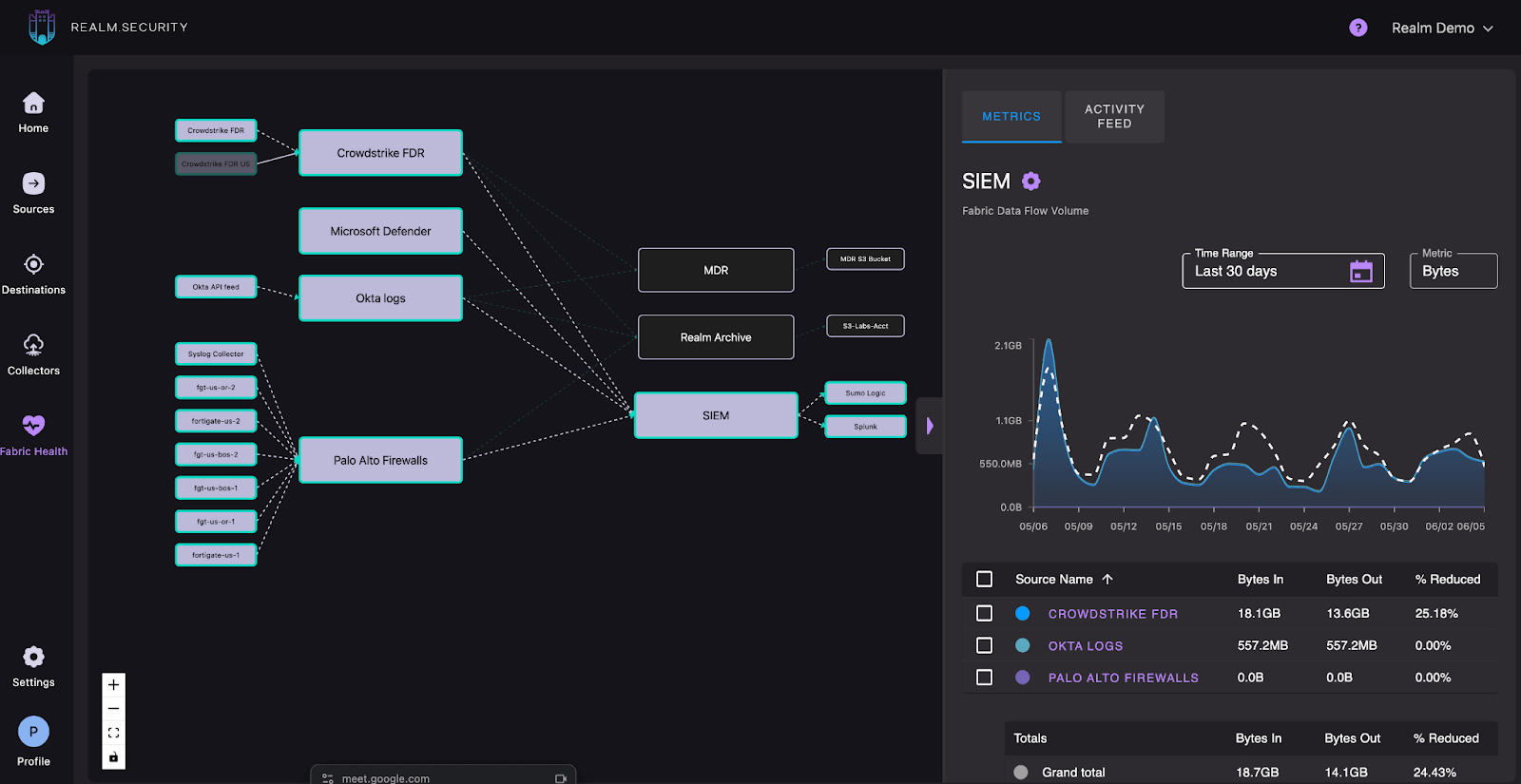

Do you have all the security-relevant data in your SIEM? The traditional approach of sending every log to a Security Information and Event Management (SIEM) system is becoming financially infeasible for many organizations. Security teams are left with a difficult trade-off: ingest everything for complete visibility, or ingest selectively to control costs and accept potential blind spots.

The Cost-Visibility Conundrum in Security Log Management

Managing security logs effectively has become a balancing act between maintaining comprehensive visibility and controlling escalating costs. Ingesting and storing log data from endpoints, firewalls, and network devices in high-cost active security tools such as SIEMs and XDRs pushes budgets to their limits.

This financial pressure often compels security teams to make strategic decisions about where to store their data, whether in high-cost, active storage ("hot storage") or lower-cost, secondary archives like data lakes ("cold storage"). While data lakes offer a budget-friendly alternative for retaining large quantities of logs, they present a significant challenge: the difficulty and expense of quickly rerouting security-relevant data back into active monitoring systems when an incident demands deeper investigation. This inherent friction can lead to critical logs being inaccessible or delayed, hindering timely investigations and increasing the mean time to detect (MTTD) threats.
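To make the hot/cold decision concrete, here is a minimal sketch of a relevance-based routing rule. It assumes each event carries a source and an event type, and the `HOT_EVENT_TYPES` policy, the `LogEvent` class, and the `route` function are all hypothetical illustrations, not the behavior of any particular product.

```python
from dataclasses import dataclass


@dataclass
class LogEvent:
    source: str      # hypothetical field, e.g. "firewall" or "edr"
    event_type: str  # hypothetical field, e.g. "deny" or "heartbeat"


# Hypothetical policy: (source, event_type) pairs considered security-relevant
# enough to justify hot (SIEM) storage. Everything else goes to the data lake.
HOT_EVENT_TYPES = {
    ("firewall", "deny"),
    ("edr", "process_start"),
    ("edr", "detection"),
}


def route(event: LogEvent) -> str:
    """Return 'hot' for SIEM-bound events and 'cold' for archive-bound events."""
    if (event.source, event.event_type) in HOT_EVENT_TYPES:
        return "hot"
    return "cold"
```

With this policy, `route(LogEvent("edr", "heartbeat"))` returns `"cold"` while `route(LogEvent("firewall", "deny"))` returns `"hot"`. Keeping the policy as data rather than code is one way to let routing follow business relevance without rewriting parsers.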

Consider CrowdStrike heartbeat data. Regular heartbeats indicate a healthy system, but storing every heartbeat from thousands of endpoints quickly adds up. During a ransomware attack, however, an analyst might urgently need historical heartbeat data to reconstruct the sequence of events. The core problem lies not in the initial storage choice, but in the cost and complexity of retrieving and re-ingesting precisely the relevant historical data from an archive when an incident unfolds, without being overwhelmed by noise or incurring unexpected expenses.
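The "precisely the relevant historical data" step can be sketched as a filter over archived records: keep only the affected hosts within the incident window, then re-ingest that subset. The record layout (`host`, `timestamp`), the sample `ARCHIVE`, and `select_for_reingest` are hypothetical and do not reflect CrowdStrike's actual event schema or any vendor's rehydration API.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical archived heartbeat records; field names are illustrative only.
ARCHIVE = [
    {"host": "ws-001", "timestamp": datetime(2024, 5, 1, 9, 0, tzinfo=timezone.utc)},
    {"host": "ws-002", "timestamp": datetime(2024, 5, 1, 9, 30, tzinfo=timezone.utc)},
    {"host": "ws-001", "timestamp": datetime(2024, 5, 1, 14, 0, tzinfo=timezone.utc)},
]


def select_for_reingest(records, hosts, start, end):
    """Keep records for the affected hosts whose timestamp is in [start, end)."""
    return [
        r for r in records
        if r["host"] in hosts and start <= r["timestamp"] < end
    ]


if __name__ == "__main__":
    incident_start = datetime(2024, 5, 1, 8, 0, tzinfo=timezone.utc)
    subset = select_for_reingest(
        ARCHIVE, {"ws-001"}, incident_start, incident_start + timedelta(hours=4)
    )
    print(len(subset), "record(s) selected for re-ingestion")
```

Here only the 09:00 record for `ws-001` is selected; the unaffected host and the out-of-window record stay in the archive, which is what keeps re-ingestion cheap.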

The Quest for Simplicity: Beyond Parsers and Formats

Security teams crave simplicity. The current landscape often requires deep expertise in parsers, data formats, and complex scripting to manage log ingestion and routing, which can be overwhelming for resource-constrained teams. The ideal solution would allow teams to "plug it in and it works," enabling them to focus on threat detection and response rather than on managing intricate data pipelines.

Foundational Principles for the Next Generation of Security Data Pipeline Platforms

The next generation of security data pipeline platforms is purpose-built to solve a fundamental challenge: getting the right security data to the right place at the right time, without breaking the bank or sacrificing visibility. These platforms are designed specifically for security logs.

How Next-Gen Security Pipeline Platforms Deliver Simplicity and Control

These platforms address the complexity of managing security logs by simplifying the entire security data flow, so that routing decisions are driven by what matters most to your business rather than by tedious parser work or rigid infrastructure constraints.

Here's what to look for:

By applying these foundational principles in a security data pipeline platform, you are not just optimizing data storage; you are making your security operations more efficient, more cost-effective, and ultimately more secure.

Ready to see how a next-generation security data pipeline platform can transform your operations? Request a demo of Realm.Security today!