Security teams today face a daunting challenge: an explosion of security data and skyrocketing SIEM costs. As organizations deploy more firewalls, endpoint agents, cloud services, and network sensors, the daily volume of logs has surged. In a modern SOC, it’s not uncommon to field thousands of security alerts per day, with some teams reporting over 100,000 alerts daily. Yet as much as 67% of alerts are never investigated, often because analysts are drowning in low-value noise. All this data comes at a price, literally. SIEM and XDR platforms that charge by ingestion volume are straining budgets. Even a moderate deployment pulling in ~100 GB of logs daily can incur around $150,000 per year in SIEM licensing fees. And with average log volumes growing about 50% year-over-year, those costs (and the data overload) will continue to rise.

In our first post, we introduced the concept of a security data fabric as a smarter, more efficient way to handle all that telemetry without drowning in cost or complexity. In this post, we’re going hands-on.

This post is for the engineers in the trenches. We’ll walk through how to look at your data sources, understand where your logs are going, and estimate how much value (and cost) each destination delivers. You'll come away with a practical framework for cutting SIEM costs without losing the visibility your team depends on.

Let’s dig in.

The SIEM Cost Crunch and Why Log Volume Management Matters

For many enterprises, SIEM cost optimization has become a pressing issue. Traditional SIEMs typically charge based on data volume (GB per day or events per second), which means the more logs you ingest, the more you pay. As security data expands, budgets can spiral out of control. Enterprises ingesting terabytes of data daily often spend millions of dollars yearly on SIEM tools. This creates a painful trade-off: pay exorbitant fees or limit what you send to the SIEM (and risk missing data that matters).

Why are we collecting so much data in the first place? The truth is that not all logs are equally helpful. In practice, many collected logs provide little to no detection value. Organizations often ingest everything by default: every firewall allow and deny, every authentication attempt, system debug messages, all collected “just in case.” Over time, this leads to data bloat, with huge volumes of events stored in an expensive SIEM even though perhaps 90% of that data is routine noise. The outcome is both financial and operational inefficiency. You’re paying to store mountains of irrelevant data, and your analysts are wading through noise to find the real threats.

This is where log volume management becomes critical. You can drastically cut costs and noise without losing essential visibility by intelligently culling the deluge of events. For example, filtering out redundant or low-risk logs can shrink SIEM ingest volumes dramatically. Many organizations report 40–70% reductions in log volume sent to high-cost platforms without losing visibility. In other words, you can see the signal instead of the noise, and pay only for the data that truly matters.

Effective log management doesn’t mean throwing data away indiscriminately. It means understanding your data, where it comes from, who needs to use it, and how long it needs to be kept, and then making informed decisions about where each stream of data should live. This brings us to the concept of a security data fabric as the architectural answer to the SIEM cost crunch.

Understanding Your Data Sources and Destinations

To get a handle on log costs, start by mapping out your data sources and data destinations. In a typical enterprise security stack, you might have sources such as:

Each of these sources generates a firehose of events. Now, consider your data destinations, the platforms or tools where this data ends up for analysis or retention. Common destinations include:

The key insight is that not every destination needs every log. Different teams and tools have distinct data needs. Here’s how to think about routing logs with intention:

For Your SIEM: Prioritize High-Fidelity, Real-Time Security Events

The SIEM is your central detection engine, but it's also your most expensive destination. Limit what you send to:

Avoid sending:

Pro Tip:

Review the top 10 log types by volume in your SIEM. If a log type doesn’t feed a detection rule or a runbook, it likely doesn’t belong there.
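A minimal sketch of that review, assuming you can export per-log-type volume from your SIEM's usage report and list which log types your detection rules and runbooks reference. The function name, log type names, and volumes below are hypothetical.

```python
from collections import Counter


def flag_uncovered_top_types(volume_gb_by_type, covered_types, top_n=10):
    """Return (log_type, gb) pairs among the top-N by volume that no
    detection rule or runbook references (candidates to move elsewhere)."""
    top = Counter(volume_gb_by_type).most_common(top_n)
    return [(log_type, gb) for log_type, gb in top if log_type not in covered_types]


# Hypothetical daily volumes (GB) and the set of types referenced by rules/runbooks.
volumes = {
    "firewall_traffic": 420.0,
    "edr_process": 180.0,
    "dns_query": 150.0,
    "windows_security": 90.0,
    "dhcp_lease": 40.0,
}
covered = {"edr_process", "windows_security"}
print(flag_uncovered_top_types(volumes, covered, top_n=4))
```

A flagged type isn't automatically safe to drop; it's a prompt to ask whether it belongs in the data lake or archive instead.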

For Your Data Lake: Offload Full-Fidelity Logs for On-Demand Analysis

Data lakes are ideal for:

They’re cheaper and more flexible than a SIEM, but lack real-time correlation. Use them for depth, not speed.

Pro Tip: Route “noisy but contextual” logs like DNS, DHCP, and NetFlow to your data lake only. At ingest, normalize everything to a consistent schema like OCSF (Open Cybersecurity Schema Framework). This ensures you can correlate and search across all your logs later with consistent, high-quality results, even if they weren’t used in real-time detection.
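To make "normalize at ingest" concrete, here is a sketch that maps a hypothetical raw resolver record (fields `ts`, `client`, `qname`, `qtype`) into a simplified, OCSF-style DNS Activity event. It covers only a subset of the class, and the IDs and field names reflect our reading of OCSF; confirm them against the schema version you target.

```python
from datetime import datetime, timezone

# Simplified subset of the OCSF DNS Activity class; verify IDs and field
# names against the OCSF schema version you actually use.
DNS_ACTIVITY_CLASS_UID = 4003
NETWORK_ACTIVITY_CATEGORY_UID = 4
ACTIVITY_QUERY = 1


def normalize_dns_query(raw: dict) -> dict:
    """Map a hypothetical raw resolver record to an OCSF-style DNS Activity event."""
    ts = datetime.fromisoformat(raw["ts"].replace("Z", "+00:00"))
    if ts.tzinfo is None:
        ts = ts.replace(tzinfo=timezone.utc)  # assume UTC when no offset is given
    return {
        "class_uid": DNS_ACTIVITY_CLASS_UID,
        "category_uid": NETWORK_ACTIVITY_CATEGORY_UID,
        "activity_id": ACTIVITY_QUERY,
        # OCSF derives type_uid as class_uid * 100 + activity_id.
        "type_uid": DNS_ACTIVITY_CLASS_UID * 100 + ACTIVITY_QUERY,
        "time": int(ts.timestamp() * 1000),  # epoch milliseconds
        "src_endpoint": {"ip": raw["client"]},
        "query": {"hostname": raw["qname"], "type": raw["qtype"]},
        "metadata": {"product": {"name": raw.get("sensor", "unknown")}},
    }


event = normalize_dns_query(
    {"ts": "2024-05-01T12:00:00Z", "client": "10.0.0.5", "qname": "example.com", "qtype": "A"}
)
```

Once every resolver's logs land in the same shape, a hunt like "all lookups from 10.0.0.5" works the same way regardless of which product produced them.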

For Your MDR or MSSP: Send Only Enriched, Actionable Alerts

Managed services don’t want to sift through raw telemetry. Give them:

Avoid sending:

Pro Tip: Set up forwarding rules to send only alerts tagged as “high,” “critical,” or “needs review.” Before flipping the switch, double-check with your MDR what data they actually ingest and respond to. Assumptions here can be expensive.

Just as important: inspect outgoing logs for sensitive data like PII or PHI. Accidentally sharing regulated information with an external vendor could violate customer agreements or internal compliance standards.
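The two MDR recommendations (forward only high-priority alerts, and scrub sensitive data on the way out) can be combined into one outbound step. This is a minimal sketch assuming hypothetical alert fields `severity`, `tags`, and `message`. The two regexes are illustrative only; real PII/PHI detection needs broader, tested rules.

```python
import re

# Labels that qualify an alert for MDR forwarding (from the Pro Tip above).
FORWARD_LABELS = {"high", "critical", "needs review"}

# Illustrative patterns only; production PII/PHI detection needs more coverage.
PII_PATTERNS = {
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def should_forward(alert: dict) -> bool:
    """True if the alert's severity or any of its tags is in the allowlist."""
    labels = {str(alert.get("severity", "")).strip().lower()}
    labels |= {str(t).strip().lower() for t in alert.get("tags", [])}
    return bool(labels & FORWARD_LABELS)


def redact(text: str) -> tuple[str, list[str]]:
    """Mask PII matches and report which pattern types fired."""
    found = []
    for name, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{name}]", text)
        if count:
            found.append(name)
    return text, found


def prepare_for_mdr(alerts: list[dict]) -> list[dict]:
    """Keep only forwardable alerts and return redacted copies."""
    outbound = []
    for alert in alerts:
        if not should_forward(alert):
            continue
        clean = dict(alert)  # shallow copy so the original alert is untouched
        clean["message"], clean["redacted"] = redact(str(alert.get("message", "")))
        outbound.append(clean)
    return outbound


alerts = [
    {"severity": "Critical", "message": "login by jane.doe@example.com"},
    {"severity": "low", "tags": ["informational"], "message": "ssn 123-45-6789"},
    {"severity": "medium", "tags": ["Needs Review"], "message": "ssn 123-45-6789"},
]
outbound = prepare_for_mdr(alerts)
```

Recording which patterns fired (`redacted`) gives you an audit trail to review with compliance before and after go-live.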

For Compliance and IT Teams: Archive What’s Required, Access When Needed

Retention and audit teams care about completeness, not speed. Place these logs in low-cost cloud storage:

Avoid routing these logs to a live SIEM unless an active policy requires real-time visibility.

Pro Tip: Automate archiving rules that move logs to cold storage after 30–90 days. If auditors only request data quarterly, don’t pay for 24/7 access.
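In practice you would usually express this as a lifecycle policy in your object storage rather than in application code, but the decision itself is simple. Here is a sketch assuming each record carries a timezone-aware `time` field (a hypothetical field name) and a configurable cutoff in the 30–90 day range.

```python
from datetime import datetime, timedelta, timezone


def partition_for_archive(records, archive_after_days=90, now=None):
    """Split records into (keep_hot, move_to_cold) by age.

    Each record needs a timezone-aware "time" value; records older than
    `archive_after_days` are selected for cold storage."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=archive_after_days)
    keep_hot, move_to_cold = [], []
    for record in records:
        (move_to_cold if record["time"] < cutoff else keep_hot).append(record)
    return keep_hot, move_to_cold


now = datetime(2024, 6, 30, tzinfo=timezone.utc)
records = [
    {"id": 1, "time": now - timedelta(days=10)},
    {"id": 2, "time": now - timedelta(days=45)},
    {"id": 3, "time": now - timedelta(days=120)},
]
hot, cold = partition_for_archive(records, archive_after_days=30, now=now)
```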

For Your XDR: Avoid Duplicating What It Already Collects

Many XDR tools natively collect endpoint and identity telemetry. If that’s the case:

Pro Tip: Audit your XDR connectors. If it’s already collecting from a log source directly, disable redundant forwarding to the SIEM to avoid double ingestion fees.
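The connector audit boils down to a set intersection plus a cost estimate. This sketch assumes you can list which sources are forwarded to the SIEM, which sources the XDR collects natively, and their daily volumes; all names, volumes, and the flat per-GB price are hypothetical. Before disabling a feed, confirm no SIEM correlation rule still depends on it.

```python
def redundant_siem_feeds(siem_forwarded, xdr_native, daily_gb, price_per_gb):
    """Return sources both forwarded to the SIEM and collected natively by
    the XDR, plus the estimated daily SIEM cost of that duplicate ingest."""
    duplicates = sorted(set(siem_forwarded) & set(xdr_native))
    duplicate_gb = sum(daily_gb.get(source, 0.0) for source in duplicates)
    return duplicates, duplicate_gb * price_per_gb


siem_forwarded = {"edr_telemetry", "identity_signin", "firewall"}
xdr_native = {"edr_telemetry", "identity_signin", "email_gateway"}
daily_gb = {"edr_telemetry": 20.0, "identity_signin": 5.0, "firewall": 100.0}
dupes, daily_cost = redundant_siem_feeds(siem_forwarded, xdr_native, daily_gb, price_per_gb=4.0)
```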

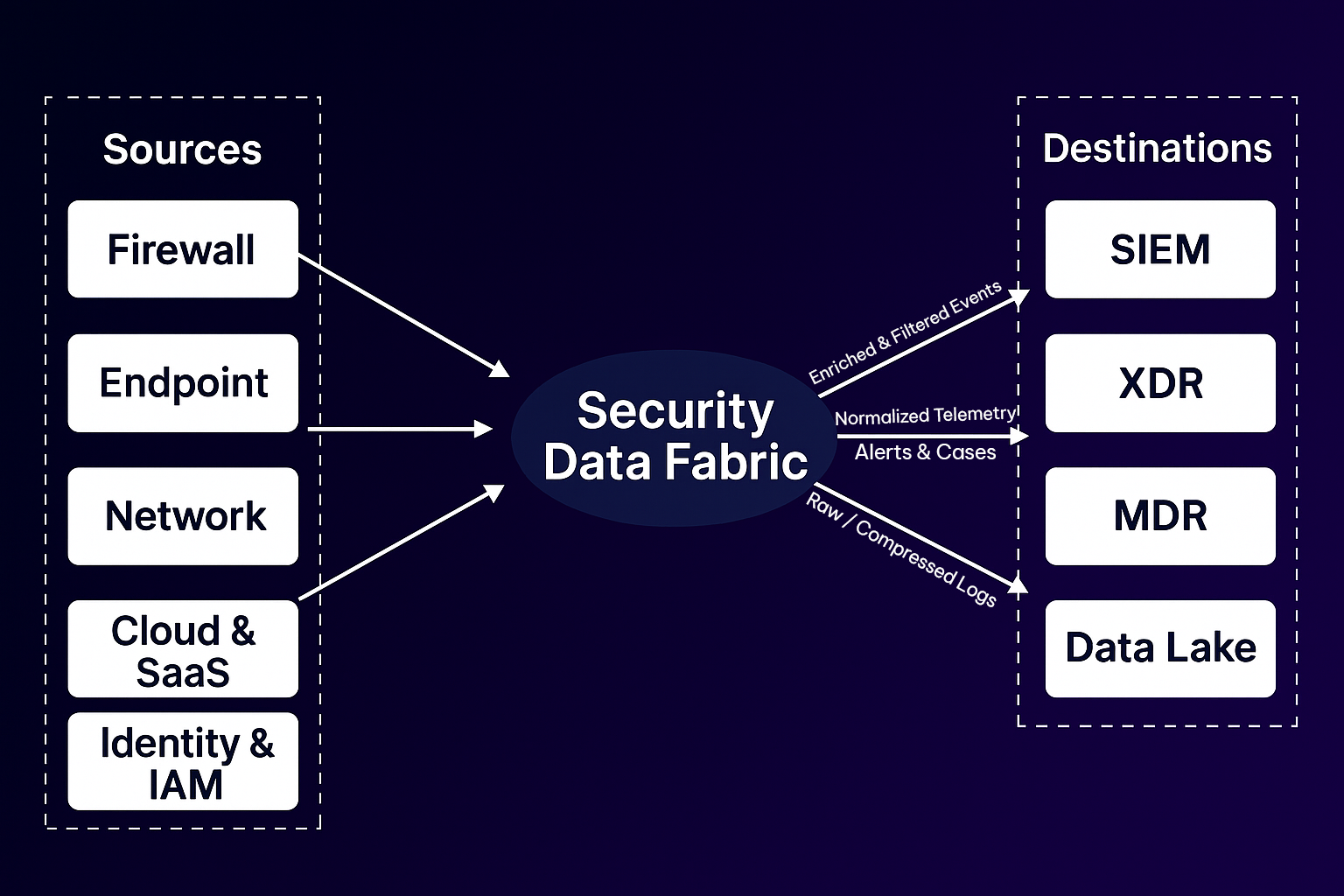

Security Data Fabric Architecture: Mapping Sources to Destinations

Once you know your sources and destinations, the next step is implementing a smart traffic cop in your architecture. That’s the role of a security data fabric. A security data fabric is essentially a unified data pipeline purpose-built for security, sitting between all those data sources and all your destinations. Its job is to centralize control over what data goes where, in what format, and at what frequency. Instead of every tool ingesting directly from each source (creating a tangle of feeds and lots of duplicate or irrelevant data in expensive places), the fabric acts as an intelligent broker.

In the diagram above, notice how the Security Data Fabric ingests from all sources, but then makes decisions on where to send each piece of data:

This architecture provides a few significant advantages:

1. Cost Control:

You can dramatically reduce the volume ingested into high-cost tools by filtering out redundancies and low-value events. (For example, you might drop verbose debug logs or index only summaries of high-frequency events.) Only the data that’s worth the cost makes it to the SIEM.

2. Noise Reduction:

Curating the data before it hits your analysts’ consoles reduces alert fatigue. The fabric might suppress or deduplicate noisy events so the SIEM doesn’t repeatedly trigger on the same benign activity.
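One common way to deduplicate is a suppression window: pass the first event for a given key, then drop repeats of that key until the window has elapsed. This is a sketch under assumptions: events are dicts with a `time` datetime, and the key fields (`host`, `signature`) and 5-minute window are hypothetical defaults. Returning the suppressed count means the repetition is summarized, not silently lost.

```python
from datetime import datetime, timedelta


def suppress_repeats(events, window=timedelta(minutes=5), key_fields=("host", "signature")):
    """Emit the first event per key and drop repeats that arrive within
    `window` of the last emitted one. Returns (emitted, suppressed_count)."""
    last_emitted: dict[tuple, datetime] = {}
    emitted, suppressed = [], 0
    for event in sorted(events, key=lambda e: e["time"]):
        key = tuple(event.get(field) for field in key_fields)
        previous = last_emitted.get(key)
        if previous is not None and event["time"] - previous < window:
            suppressed += 1
            continue
        last_emitted[key] = event["time"]
        emitted.append(event)
    return emitted, suppressed


t0 = datetime(2024, 1, 1, 0, 0)
events = [
    {"time": t0, "host": "web1", "signature": "benign_scan"},
    {"time": t0 + timedelta(minutes=1), "host": "web1", "signature": "benign_scan"},
    {"time": t0 + timedelta(minutes=3), "host": "web1", "signature": "benign_scan"},
    {"time": t0 + timedelta(minutes=6), "host": "web1", "signature": "benign_scan"},
    {"time": t0 + timedelta(minutes=1), "host": "web2", "signature": "benign_scan"},
]
kept, dropped = suppress_repeats(events)
```

The full, unsuppressed stream can still go to the data lake; only the SIEM copy is thinned.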

3. Flexibility:

A fabric decouples sources from destinations. Want to send firewall logs to a new analytics tool or split off a feed for a fraud detection team? The fabric can do that without touching the firewall or burdening the SIEM. It also eases migrations. If you switch SIEM vendors or add an XDR, you don’t need to re-engineer dozens of integrations; the fabric layer redirects the data flow.
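The decoupling idea can be sketched as a routing table of (destination, predicate) pairs, with destination writers supplied separately. The rules, field names, and destination names here are hypothetical; the point is that sources never reference a destination directly.

```python
from typing import Callable

# Each rule names a destination and a predicate over the event.
Route = tuple[str, Callable[[dict], bool]]

ROUTES: list[Route] = [
    ("siem", lambda e: e.get("severity") in {"high", "critical"}),
    ("data_lake", lambda e: True),  # full-fidelity copy of everything
    ("fraud_team", lambda e: e.get("source") == "firewall"),
]


def route(event: dict, routes: list[Route] = ROUTES) -> list[str]:
    """Return every destination whose predicate matches the event."""
    return [dest for dest, matches in routes if matches(event)]


def dispatch(event: dict, sinks: dict[str, Callable[[dict], None]], routes: list[Route] = ROUTES) -> None:
    """Hand the event to the writer registered for each matching destination."""
    for dest in route(event, routes):
        sinks[dest](event)


captured = {"siem": [], "data_lake": [], "fraud_team": []}
sinks = {name: bucket.append for name, bucket in captured.items()}
dispatch({"source": "edr", "severity": "high"}, sinks)
```

Under this design, switching SIEM vendors means registering a different writer under `"siem"`, and adding a feed for a new team is one more entry in `ROUTES`; neither change touches the sources.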

4. Centralized Management:

Instead of maintaining log collection scripts on every system or custom ETL jobs for each destination, you manage data routing in one place. This is far easier to maintain and govern. (It’s worth noting: organizations that try to build this in-house often find it “scales poorly, is error-prone, and hard to maintain” without dedicated engineering effort.)

In essence, the security data fabric gives you a single pane of glass for your security telemetry. You regain control by deciding which logs go to the SIEM, which can be shunted to cheaper storage, and which might not need to be collected at all. The result is the ability to own your data strategy instead of letting the SIEM licensing model dictate what you can afford to monitor.

Practical Steps to Right-Size Your Logging (and Budget)

Adopting a security data fabric approach starts with some practical groundwork. Here are key steps and considerations for engineers to begin log volume management and cost reduction:

1. Measure Your Daily Log Volume

Start by establishing a baseline. Use your SIEM's dashboards or license usage reports to determine how much data is ingested daily. Break it down by source wherever possible (e.g., firewalls: 30%, EDR: 25%, cloud logs: 20%, etc.). This will help you identify where your most significant data contributors are.

Also, take note of ingestion spikes and anomalies. Are there sources that suddenly generate large volumes during patch cycles, reboots, or outages? These patterns are key to optimizing later.

Pro Tip:

Export 7–14 days of ingestion metrics by source and load them into a spreadsheet. Add a column to estimate the daily cost per source based on your SIEM's pricing model. This quick exercise often highlights low-value, high-cost sources hiding in plain sight.
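If you'd rather script the spreadsheet step, here is a sketch that turns exported rows of `(day, source, gb)` into each source's average daily volume, share of total ingest, and estimated daily cost. It assumes a flat price per GB, which is a simplification; substitute your vendor's actual pricing model. The sample rows are hypothetical.

```python
from collections import defaultdict


def per_source_summary(rows, cost_per_gb):
    """rows: iterable of (day, source, gb). Returns one dict per source,
    sorted by volume, with average daily GB, share of total, and daily cost."""
    totals = defaultdict(float)
    days = set()
    for day, source, gb in rows:
        totals[source] += gb
        days.add(day)
    n_days = len(days)
    grand_total = sum(totals.values())
    summary = []
    for source, total in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
        avg_daily = total / n_days
        summary.append({
            "source": source,
            "avg_daily_gb": avg_daily,
            "share_pct": 100.0 * total / grand_total,
            "est_daily_cost": avg_daily * cost_per_gb,
        })
    return summary


rows = [
    ("d1", "firewall", 60.0), ("d1", "edr", 30.0), ("d1", "cloud", 10.0),
    ("d2", "firewall", 40.0), ("d2", "edr", 50.0), ("d2", "cloud", 10.0),
]
summary = per_source_summary(rows, cost_per_gb=2.0)
```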

Once you’ve built that baseline, set up real-time alerts for dramatic fluctuations. Spikes might indicate misconfigurations, malicious activity, or even downstream issues with detection workflows. They’re also crucial for maintaining SLA performance if your team is responsible for fast response times.
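A simple way to alert on dramatic fluctuations is a z-score against each source's recent history. This is one possible approach, not the only one. The sketch flags both spikes and drops, since a source that suddenly goes quiet can matter as much as one that floods. The 3.0 threshold is an assumption to tune per source.

```python
from statistics import mean, pstdev


def volume_anomaly(history_gb, today_gb, z_threshold=3.0):
    """Compare today's ingest for one source against its baseline.
    Returns "spike", "drop", or None."""
    baseline = mean(history_gb)
    spread = pstdev(history_gb)
    if spread == 0:
        # Perfectly flat history: any change is unusual, so report its direction.
        if today_gb == baseline:
            return None
        return "spike" if today_gb > baseline else "drop"
    z = (today_gb - baseline) / spread
    if z > z_threshold:
        return "spike"
    if z < -z_threshold:
        return "drop"
    return None


history = [100, 110, 90, 105, 95, 100, 100]  # hypothetical GB/day for one source
```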

2. Calculate Your Ingestion Costs

Translate your log volume into dollars. If your SIEM or XDR tool charges by GB/day, calculate your monthly and annual spend per source category. Even if your vendor offers “ingestion tiers,” you can estimate overage or burst costs from recent peaks.
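The arithmetic can be sketched in two small functions, one for annualized spend under a flat per-GB price and one for overage against a committed daily tier. Both pricing models are assumptions; vendors differ, for example measuring against a monthly average rather than each day, so adapt accordingly.

```python
def annual_spend(avg_daily_gb: float, price_per_gb: float) -> float:
    """Flat per-GB model: average daily volume x price x 365 days."""
    return avg_daily_gb * price_per_gb * 365


def estimate_overage(daily_gb: list[float], committed_gb_per_day: float, overage_price_per_gb: float):
    """Sum the GB above a committed daily tier on each day and price the excess."""
    excess_gb = sum(max(0.0, gb - committed_gb_per_day) for gb in daily_gb)
    return excess_gb, excess_gb * overage_price_per_gb


recent_days = [90.0, 120.0, 100.0, 150.0]  # hypothetical daily volumes in GB
excess, overage_cost = estimate_overage(recent_days, committed_gb_per_day=100.0, overage_price_per_gb=5.0)
```

For scale, the earlier figure of roughly $150,000 per year for ~100 GB/day works out to about $4.11 per GB under this flat model.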

This is also a good moment to review storage and retention charges. Some SIEMs charge separately for historical searches or “hot” vs “cold” storage tiers.

Pro Tip:

If your SIEM doesn't expose cost-per-source breakdowns, build one manually: estimated volume × cost per GB. Present this in your next budget review to highlight optimization opportunities and justify routing changes.

3. Identify High-Volume, Low-Value Logs

Now it's time to separate signal from noise. Engage with SOC analysts, threat hunters, and IR teams to understand which logs are used for detection, investigation, or compliance, and which ones aren’t.

Common culprits for noise:

Look for logs that are high-frequency but low-fidelity. These often contribute little to detection efficacy but drive up ingest costs.

Pro Tip:

Pull a sample day’s logs and sort them by event count. Then tag each log type as Critical, Useful, or Unused. Use this to prioritize what can be filtered, summarized, or offloaded to cheaper storage.
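Here is a sketch of that triage, assuming you have event counts per log type and a tag per type agreed with your SOC. The log types, counts, and the action wording attached to each tag are hypothetical. Untagged types are surfaced for review rather than guessed.

```python
# Hypothetical mapping from tag to the default handling discussed above.
ACTIONS = {
    "Critical": "keep in SIEM",
    "Useful": "summarize or route to data lake",
    "Unused": "filter or offload to archive",
}


def triage(event_counts, tags):
    """Return (log_type, count, tag, action) rows sorted by event count, highest first."""
    plan = []
    for log_type, count in sorted(event_counts.items(), key=lambda kv: kv[1], reverse=True):
        tag = tags.get(log_type, "Untagged")
        plan.append((log_type, count, tag, ACTIONS.get(tag, "review with SOC")))
    return plan


counts = {"dhcp_lease": 2_000_000, "edr_alert": 5_000, "fw_allow": 9_000_000, "app_debug": 500_000}
tags = {"edr_alert": "Critical", "dhcp_lease": "Useful", "fw_allow": "Unused"}
plan = triage(counts, tags)
```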

4. Reallocate Logs to the Right Destinations

This is where cost savings really materialize. Once you’ve mapped value and volume, reroute logs accordingly:

Avoid duplicating data across destinations unless necessary. For example, if your XDR already ingests EDR logs, you may not need to send them to your SIEM too.

Pro Tip:

Create a routing matrix that shows each log source and where its data should go. Then implement changes one source at a time, beginning with lower-risk sources such as firewall or internal DNS logs.

5. Tune Retention and Granularity

You don't need to keep every log forever. Set different retention windows for each destination:

Also consider adjusting log granularity: summarize repetitive entries, drop debug-level messages, or store only metadata for high-volume sources like DHCP.
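Two of those granularity controls fit in a single per-event function: drop debug-level messages outright, and project DHCP events down to a few metadata fields. The field names (`level`, `source`, `client_mac`, and so on) are hypothetical; map them to your actual schema.

```python
# Hypothetical metadata fields worth keeping for DHCP events.
DHCP_METADATA_FIELDS = ("source", "time", "client_mac", "assigned_ip", "hostname", "action")


def reduce_granularity(event: dict):
    """Return None to drop the event, or a (possibly slimmer) event to keep."""
    if str(event.get("level", "")).lower() == "debug":
        return None  # debug-level noise never leaves the pipeline
    if event.get("source") == "dhcp":
        # Keep only metadata; raw payloads and option blobs are discarded.
        return {k: event[k] for k in DHCP_METADATA_FIELDS if k in event}
    return event  # everything else passes through unchanged


dhcp_event = {
    "source": "dhcp", "time": 1, "client_mac": "aa:bb:cc:dd:ee:ff",
    "assigned_ip": "10.0.0.9", "raw": "DHCPACK on 10.0.0.9 ...", "options": [1, 3, 6],
}
```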

Pro Tip:

Automate log tiering: Set up rules that move logs from your SIEM to cold storage after 30 days, or store full logs for 7 days and summaries afterward. Most data fabric platforms can orchestrate this without manual intervention.

Collaborate with stakeholders (SOC analysts, compliance officers, IT admins, and external partners) throughout these steps. Make sure the new data routing still meets each group’s needs. Done correctly, you’ll maintain or even improve everyone’s visibility while significantly cutting costs. It’s common to find that after tuning, your SIEM ingests roughly half of its previous daily volume or less, concentrated on the most valuable 30–50% of the data. The rest goes to cheaper tiers or is dropped entirely, and the reduction shows up immediately in your licensing usage.

Conclusion: Spend Smarter on Security Data

In the face of relentlessly growing log volumes and tightening budgets, taking control of your security data pipeline is one of the smartest and most impactful moves a security team can make. Organizations can dramatically reduce SIEM costs by adopting a security data fabric architecture and practicing disciplined log volume management while improving visibility, agility, and operational resilience.

The key is simple: send the right data to the right place. High-fidelity, time-sensitive logs belong in high-performance tools like your SIEM or XDR. Everything else (contextual logs, compliance data, and archival records) can be filtered, summarized, or rerouted to lower-cost destinations without sacrificing coverage.

Teams that embrace this approach are already seeing the benefits:

And they’re doing it without making trade-offs on visibility or compliance. A well-architected security data fabric provides the flexibility to adapt, the control to enforce, and the intelligence to optimize, so you're not held hostage by log volume growth or vendor pricing models.

At Realm.Security, we designed our platform specifically for this challenge. We believe security engineers shouldn’t need professional services, brittle regex scripts, or six-month deployments to manage their data. Our platform puts you back in control, enabling you to filter, route, and optimize log flows in minutes, not months.

If you're ready to reduce SIEM costs and reclaim control over your security data architecture, let’s talk. Schedule a demo today!